Prove How Much Time Your AI Saves.

We track every human edit to your AI outputs, then help you improve performance and prove ROI to your customers.

at NAACL and AAAI

You Can't Prove Your AI Works

Your customers ask "how much time does this actually save?" You don't have an answer.

Your AI runs in production, but you have no idea if it's improving or getting worse. When renewal time comes, you have dashboards full of latency charts but nothing that proves business value.

Your competitors will figure this out eventually. Get there first.

No Real Metrics

Latency and error rates don't tell you if your AI is saving time or creating work. You need human-level measurement.

No Proof for Customers

When your customers ask for ROI numbers, you scramble. Case studies are vague. Renewals are harder than they should be.

No Improvement Loop

Your model shipped and stopped improving. Without systematic feedback, you're guessing at what to fix.

We Measure Human Time. That Changes Everything.

Most AI analytics tools try to remove humans from the loop. We do the opposite.

FullOversight captures every human edit to AI outputs. We measure how long reviews take, track intervention rates over time, and calculate exactly how much time your AI saves.

The result: hard numbers like "124 hours saved this month" and "81% autonomy rate." Numbers your marketing team can put in a case study. Numbers your customers will believe.
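As a rough illustration of where numbers like these can come from, here is a minimal sketch. It is not FullOversight's actual implementation. The `Review` record, the `baseline_seconds` parameter, and both definitions (autonomy rate as the share of outputs accepted without edits, time saved as a manual baseline minus actual review time) are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """One human review of one AI output (hypothetical record shape)."""
    edited: bool           # True if the reviewer changed the output
    review_seconds: float  # time the reviewer spent on this output


def autonomy_rate(reviews):
    """Share of outputs accepted without edits, from 0.0 to 1.0 (assumed definition)."""
    if not reviews:
        return 0.0
    accepted = sum(1 for r in reviews if not r.edited)
    return accepted / len(reviews)


def hours_saved(reviews, baseline_seconds):
    """Assumed definition: manual baseline per task minus actual review time, in hours."""
    saved_seconds = sum(baseline_seconds - r.review_seconds for r in reviews)
    return saved_seconds / 3600


# Made-up sample data, for illustration only.
reviews = [
    Review(edited=False, review_seconds=30),
    Review(edited=False, review_seconds=45),
    Review(edited=True, review_seconds=120),
    Review(edited=False, review_seconds=45),
]

print(f"autonomy rate: {autonomy_rate(reviews):.0%}")
print(f"hours saved: {hours_saved(reviews, baseline_seconds=600):.2f}")
```

The baseline matters: time saved is only as credible as the estimate of how long the task takes without AI, so that figure should come from measured manual work where possible.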

Capture Every Edit

Human reviewers accept or edit AI outputs. Every interaction is logged automatically. No extra work required.

Measure Real Impact

See intervention rates, average edit time, and total time spent. Compare models side by side. Know exactly where your AI struggles.

Prove Business Value

Export time-saved metrics, accuracy trends, and reliability scores. Give your sales team proof that closes deals.

How It Works

Connect

Integrate FullOversight with your AI workflow. Human reviewers continue working as normal. We capture their edits automatically.

Measure

Track intervention rates, edit times, and acceptance rates in real time. Compare performance across models, prompts, and time periods.
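A side-by-side model comparison can be as simple as grouping review events by model. The sketch below assumes each event is a `(model_name, was_edited)` pair. That event shape, the model names, and the function name are hypothetical and are not part of any documented FullOversight API.

```python
from collections import defaultdict


def intervention_rate_by_model(events):
    """Map each model name to the fraction of its outputs that a human edited."""
    totals = defaultdict(int)
    edited = defaultdict(int)
    for model, was_edited in events:
        totals[model] += 1
        if was_edited:
            edited[model] += 1
    return {model: edited[model] / totals[model] for model in totals}


# Made-up sample events, for illustration only.
events = [
    ("model-a", True), ("model-a", False), ("model-a", False), ("model-a", False),
    ("model-b", True), ("model-b", True), ("model-b", False), ("model-b", False),
]

rates = intervention_rate_by_model(events)
for model, rate in sorted(rates.items()):
    print(f"{model}: {rate:.0%} of outputs edited")
```

The same grouping key could be a prompt version or a calendar week instead of a model name, which covers the other comparisons mentioned above.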

Prove

Generate reports showing time saved, autonomy rates, and improvement trends. Use them in sales decks, case studies, and customer renewals.

Why Human Feedback Is the Only Metric That Matters

AI benchmarks are synthetic. Latency metrics are table stakes. The only way to know if your AI actually works is to measure what humans do with it.

When a human accepts an output without editing, that's a real signal. When they spend 2 minutes fixing it, that's a real signal too. We turn those signals into metrics you can trust.

What Others Measure

- Latency (ms)

- Error rates

- Token usage

- Synthetic benchmarks

None of this tells you if your AI saves time.

What We Measure

- Human intervention rate

- Time spent per review

- Edit distance and patterns

- Total hours saved

This is what your customers actually care about.
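For readers unfamiliar with edit distance, one common way to quantify how much a reviewer changed an output is the Levenshtein distance between the AI's text and the human's final version. The sketch below assumes that metric. The page does not say which distance FullOversight uses, and the function name and sample strings are illustrative.

```python
def edit_distance(original, edited):
    """Levenshtein distance: minimum single-character inserts, deletes, and substitutions."""
    # previous[j] holds the distance between the processed prefix of
    # `original` and the first j characters of `edited`.
    previous = list(range(len(edited) + 1))
    for i, a in enumerate(original, start=1):
        current = [i]
        for j, b in enumerate(edited, start=1):
            cost = 0 if a == b else 1
            current.append(min(
                previous[j] + 1,         # delete a character from original
                current[j - 1] + 1,      # insert a character from edited
                previous[j - 1] + cost,  # substitute, or keep a match
            ))
        previous = current
    return previous[-1]


# Made-up sample texts, for illustration only.
ai_output = "Refund issued on 3 May"
human_final = "Refund issued on 5 May"
print(edit_distance(ai_output, human_final))
```

A distance of zero means the output was accepted as written. Larger values indicate heavier edits, though raw character counts should be read alongside review time, since a one-character fix can still take a reviewer minutes to find.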

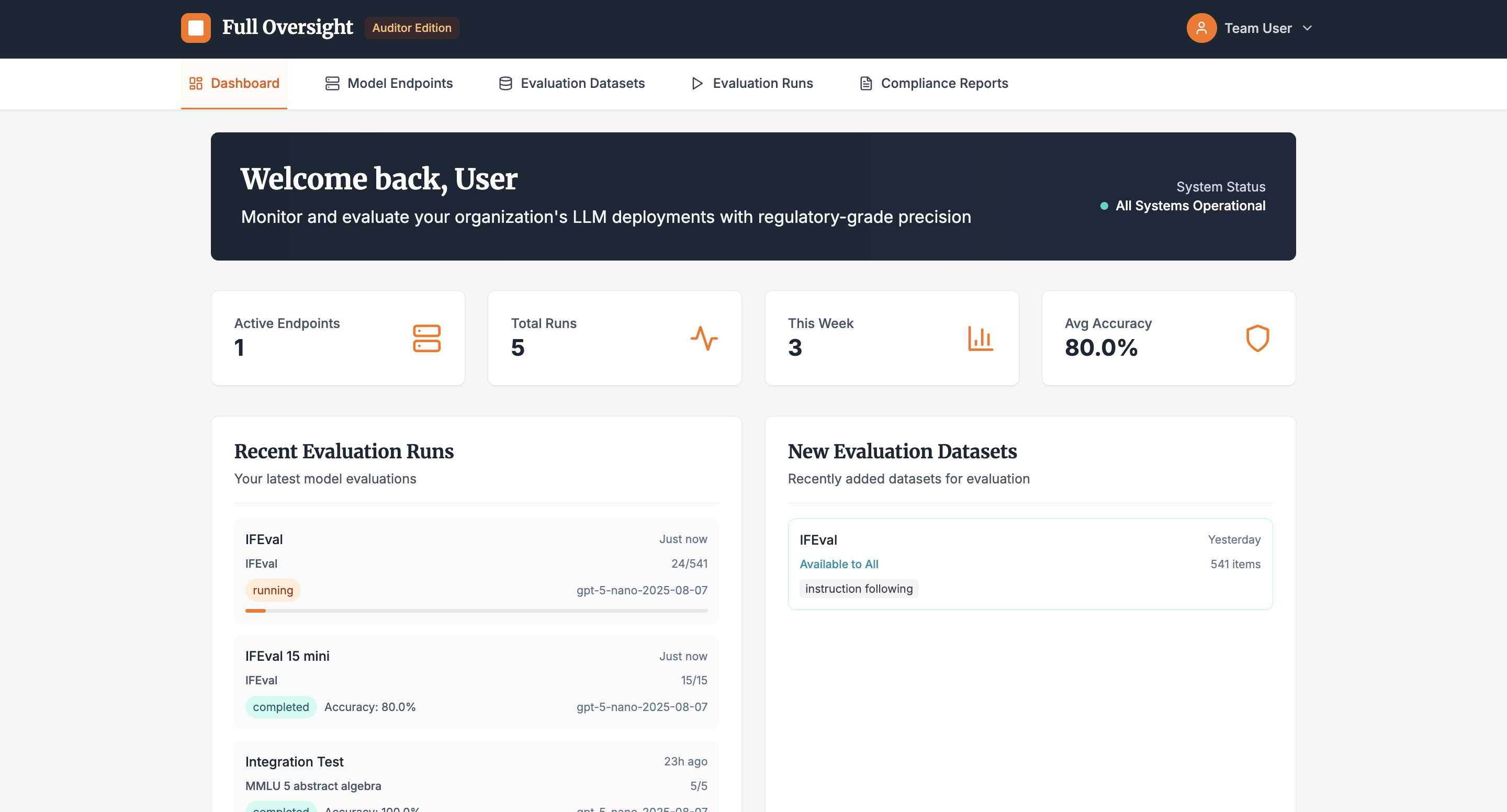

See It In Action

Explore a live demo with simulated data. See how FullOversight tracks human interventions, compares model performance, and calculates time savings.

Ready to Prove Your AI Works?

Get started with FullOversight. We'll help you set up human feedback capture and start measuring real impact within days.

Or email us directly at contact@fulloversight.com